Artificial intelligence (AI) has become a fundamental part of modern society, influencing everything from criminal justice and healthcare to hiring decisions. But as AI systems grow more powerful, concerns about bias and fairness have grown with them. Bias in AI can lead to unfair treatment of particular groups, which deepens social inequalities and erodes trust in AI systems. In this blog, we examine the problems associated with AI bias and how to advance fairness in AI development.

Recognising AI Bias

AI bias occurs when an AI system produces systematically biased results because of flawed assumptions made during data collection, algorithm design, or deployment. Bias can emerge at different stages of AI development and lead to discriminatory outcomes.

AI Bias Types:

Data Bias: AI systems are trained on historical data, which may reflect societal biases. If the training data is not representative, the AI may produce skewed predictions.

Algorithmic Bias: Some AI algorithms may favour particular groups because of flaws in how they process data or make decisions.

Selection Bias: If the training dataset does not include diverse populations, the AI model may not perform fairly across demographic groups.

Human Bias: The people building AI systems may unintentionally introduce biases when they select features and assign labels.

Challenges in Ensuring AI Fairness

Lack of Diverse Training Data: AI systems trained on homogeneous datasets struggle to generalise to diverse user groups.

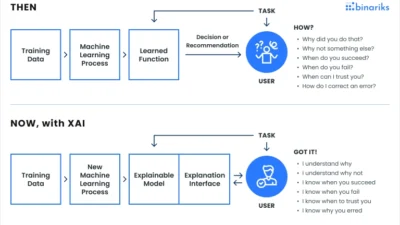

AI Model Opacity: Many AI systems, particularly deep learning models, act as “black boxes,” making it difficult to detect and correct biases.

Unintentional Reinforcement of Discrimination: By reproducing biases present in historical data, AI may make existing social inequalities worse.

Ethical Dilemmas: Fairness in AI is hard to define because it can mean different things depending on the context (e.g., equal opportunity vs. equal outcomes).

Regulatory Gaps: Many AI applications operate in a legal grey area, with little oversight of bias and fairness.
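
To make the "equal opportunity vs. equal outcomes" tension above concrete, here is a minimal sketch in Python. It assumes one common reading of each term: "equal outcomes" as equal selection rates across groups (often called demographic parity) and "equal opportunity" as equal true positive rates. The group names, labels, and predictions are invented toy values, not real data.

```python
def mean(values):
    """Average of a list of 0/1 values (the list must not be empty)."""
    return sum(values) / len(values)

def selection_rate(y_pred, groups, group):
    """Share of a group's members that the model predicts as positive."""
    return mean([p for p, g in zip(y_pred, groups) if g == group])

def true_positive_rate(y_true, y_pred, groups, group):
    """Share of a group's truly positive members predicted as positive."""
    return mean([p for t, p, g in zip(y_true, y_pred, groups)
                 if g == group and t == 1])

# Hypothetical toy data: four people in group A, four in group B.
groups = ["A"] * 4 + ["B"] * 4
y_true = [1, 1, 0, 0, 1, 1, 1, 1]  # who actually qualifies
y_pred = [1, 1, 0, 0, 1, 1, 0, 0]  # who the model selects

# "Equal outcomes": difference in selection rates between the groups.
outcome_gap = (selection_rate(y_pred, groups, "A")
               - selection_rate(y_pred, groups, "B"))

# "Equal opportunity": difference in true positive rates between the groups.
opportunity_gap = (true_positive_rate(y_true, y_pred, groups, "A")
                   - true_positive_rate(y_true, y_pred, groups, "B"))

print(outcome_gap, opportunity_gap)  # prints: 0.0 0.5
```

In this toy case both groups are selected at the same rate, so the model looks fair by the equal-outcomes measure, yet qualified members of group B are selected only half as often as qualified members of group A. Which measure matters more depends on the application.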

Solutions for Fairness and Bias in AI

- Enhancing Diversity and Quality of Data

Ensure that datasets are diverse and representative of different demographic groups.

Audit and update datasets regularly to remove historical biases.

Use synthetic data augmentation techniques to balance under-represented groups.
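
As a rough illustration of balancing under-represented groups, the sketch below uses random oversampling, which duplicates existing records rather than generating new synthetic ones. It is a simpler stand-in for true synthetic augmentation, not an implementation of it. The function name `oversample_to_balance`, the `group` field, and the toy records are all hypothetical.

```python
import random
from collections import defaultdict

def oversample_to_balance(records, group_key, seed=0):
    """Duplicate records from smaller groups at random until every group
    has as many records as the largest group."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    by_group = defaultdict(list)
    for record in records:
        by_group[record[group_key]].append(record)

    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Sample with replacement to fill the gap for smaller groups.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced

# Hypothetical toy data: group "B" is under-represented (2 of 10 records).
data = ([{"group": "A", "x": i} for i in range(8)]
        + [{"group": "B", "x": i} for i in range(2)])

balanced = oversample_to_balance(data, "group")
counts = {g: sum(1 for r in balanced if r["group"] == g) for g in ("A", "B")}
print(counts)  # prints: {'A': 8, 'B': 8}
```

Oversampling equalises group sizes but adds no new information, so a model can still overfit to the few original records of the smaller group. Collecting more representative data remains the stronger fix where it is feasible.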

- Explainability and Algorithmic Transparency

Build interpretable AI models so that it is clear how decisions are made.

Implement fairness-aware learning strategies to reduce skewed predictions.

Apply explainable AI (XAI) techniques to make AI decisions more transparent.
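
One example of a fairness-aware strategy is reweighing, a preprocessing technique that assigns each training example a weight so that group membership and the outcome label become statistically independent in the weighted data. The post does not name a specific method, so treat this as one possible choice. The sketch below computes the weights as P(group) × P(label) / P(group, label); the function name and toy data are assumptions for illustration.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Return one weight per example: P(group) * P(label) / P(group, label).

    Combinations that are rarer than independence would predict get
    weights above 1; over-represented combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights)
                   if g == group and y == 1)
    return positive / total

# Hypothetical toy data: group A has 3 of 4 positive labels, group B 1 of 4.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "A")
rate_b = weighted_positive_rate(groups, labels, weights, "B")
```

After reweighing, both groups have the same weighted positive rate in this toy example (0.5 each, up to floating-point rounding). The weights would then be passed to a training routine that accepts per-sample weights.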

- Methods for Identifying and Reducing Bias

Use statistical fairness metrics (such as disparate impact analysis) to conduct bias audits.

Fine-tune AI models using adversarial debiasing techniques.

Apply fairness constraints when training the model.
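
The disparate impact analysis mentioned above can be sketched in a few lines. The metric is the ratio of selection rates between a protected group and a reference group. The helper names and the toy predictions below are hypothetical, and the 0.8 threshold reflects the commonly cited "four-fifths rule" of thumb rather than a universal standard.

```python
def selection_rate(predictions, groups, group):
    """Share of a group's members that receive a positive prediction."""
    selected = [p for p, g in zip(predictions, groups) if g == group]
    return sum(selected) / len(selected)

def disparate_impact_ratio(predictions, groups, protected, reference):
    """Selection rate of the protected group divided by that of the
    reference group. A value of 1.0 means equal selection rates."""
    return (selection_rate(predictions, groups, protected)
            / selection_rate(predictions, groups, reference))

# Hypothetical toy audit: 1 = favourable decision, 0 = unfavourable.
preds = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["A"] * 5 + ["B"] * 5

ratio = disparate_impact_ratio(preds, groups, protected="B", reference="A")
flagged = ratio < 0.8  # four-fifths rule of thumb (an assumed threshold)
print(round(ratio, 2), flagged)  # prints: 0.5 True
```

Here group B receives favourable decisions at half the rate of group A, so the audit would flag the model for closer review. A flag is a prompt for investigation, not proof of discrimination on its own.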

- Frameworks and Regulations for Ethical AI

Establish ethical guidelines and industry standards for AI development.

Support government initiatives to regulate the fairness of AI systems.

Encourage cooperation between ethicists, policymakers, and AI researchers.

- Inclusive AI Development and Human Oversight

Involve diverse teams in designing and developing AI models.

Maintain human-in-the-loop systems for critical decision-making.

Engage with affected communities to understand their concerns and perspectives.

The Future of Fair AI

Ensuring that AI is fair is an ongoing challenge that calls for continual improvements in algorithm design, data collection, and regulatory policy. As AI continues to influence many aspects of life, addressing bias will be essential to building ethical and trustworthy AI systems. If we put fairness, transparency, and inclusivity first, we can develop AI that benefits everyone equally.

Conclusion

AI bias is a serious problem that requires proactive measures to ensure fairness and prevent discrimination. By tackling data biases, improving transparency, putting mitigation strategies into practice, and supporting ethical AI frameworks, we can create AI systems that are both robust and fair. Building AI technologies that benefit everyone equally is the shared responsibility of AI developers, policymakers, and society at large.

How do you feel about the fairness of AI? Post your thoughts in the comments below!