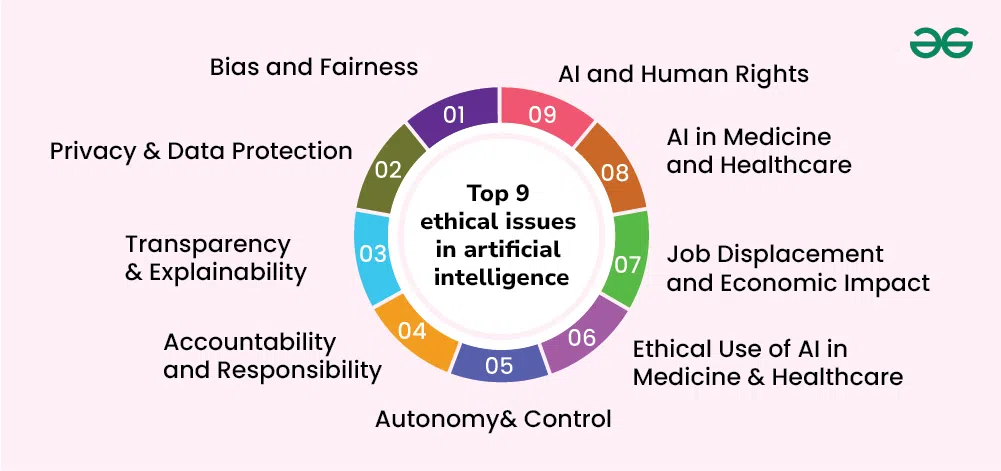

The development of artificial intelligence (AI) raises a number of ethical issues that need to be resolved for its responsible and equitable application. AI has the potential to revolutionise a variety of sectors, including healthcare and finance, but it also brings up issues with bias, privacy, responsibility, and transparency.

Bias and Fairness in AI

Bias is one of the biggest ethical problems in the development of AI. AI models are trained on past data, which may contain biases that produce discriminatory results if left unchecked.

Important Concerns About Bias:

Data Bias: AI programs that have been trained on skewed datasets may reinforce current disparities.

Algorithmic Bias: Some AI models may treat certain groups unfairly by favouring other groups over them.

Mitigating Bias: Bias in AI systems can be lessened by preserving diversity in training data and carrying out routine audits.
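One way to make a routine audit concrete is to compare how often each group receives a favourable outcome. The sketch below is only an illustration: the function names, the group labels "A" and "B", and the sample decisions are all hypothetical, and the selection-rate gap it computes (often called the demographic parity difference) is just one of several possible fairness measures.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable outcomes for each group.

    `records` is an iterable of (group, outcome) pairs, where outcome is 1
    for a favourable decision and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group, model decision)
decisions = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(decisions)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it flags where a closer look at the data and the model is warranted.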

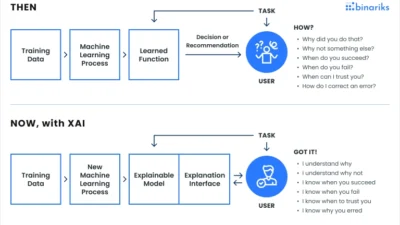

Explainability and Transparency

A lot of AI models, particularly deep learning systems, function as “black boxes,” which makes it challenging to understand how they make decisions.

The Significance of Transparency:

Trust: Stakeholders and users must comprehend how AI makes judgements.

Regulation Compliance: To adhere to ethical and legal requirements, sectors including healthcare and finance need explainable AI.

Auditing and Debugging: Developers can find mistakes and enhance system performance with transparent AI models.
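As a small illustration of what a transparent model can offer, the sketch below breaks a linear score into per-feature contributions. It assumes a simple linear scoring model, which is not how deep learning systems work; the feature names, weights, and values are hypothetical.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and each feature's contribution to it.

    For a linear model the contribution of a feature is simply
    weight * value, so the explanation is exact for this model class.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    # List the most influential features first, regardless of sign.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical credit-style example
weights = {"income": 0.5, "missed_payments": -0.25}
applicant = {"income": 4.0, "missed_payments": 2.0}

score, ranked = explain_linear_score(weights, applicant, bias=1.0)
print(score, ranked)
```

A breakdown like this lets a developer or auditor see which inputs drove a particular decision, which is much harder to obtain from a black-box model.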

Data Security and Privacy

Large volumes of personal data are frequently used by AI systems, which raises questions regarding data security and user privacy.

Best Practices for Data Protection:

Anonymisation: Removing personally identifying information to preserve user privacy.

Secure Storage: Putting encryption and other cybersecurity steps into practice to protect data.

User Consent: Making sure users are aware of the ways in which their data is gathered and utilised.
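The practices above can be sketched in a few lines of code. This is a minimal example under stated assumptions: the field names (`name`, `email`, `phone`, `user_id`, `consent`) and both helper functions are hypothetical. Note that replacing an ID with a keyed hash is pseudonymisation rather than full anonymisation, since whoever holds the key can still link records.

```python
import hashlib
import hmac

# Hypothetical names of fields that identify a person directly.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymise(record, secret_key, id_field="user_id"):
    """Drop direct identifiers and replace the ID with a keyed hash."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    message = str(record[id_field]).encode("utf-8")
    cleaned[id_field] = hmac.new(secret_key, message, hashlib.sha256).hexdigest()
    return cleaned


def prepare_for_training(records, secret_key):
    """Keep only records with explicit consent, then pseudonymise them."""
    return [
        pseudonymise(record, secret_key)
        for record in records
        if record.get("consent") is True
    ]


# Hypothetical input data
users = [
    {"user_id": 42, "name": "Ada", "email": "ada@example.com", "age": 36, "consent": True},
    {"user_id": 43, "name": "Bob", "email": "bob@example.com", "age": 51, "consent": False},
]

key = b"replace-with-a-secret-from-a-key-store"  # placeholder, not a real key
training_rows = prepare_for_training(users, key)
print(training_rows)
```

In practice the key would be kept in a secrets manager rather than in source code, and the stored data would also be encrypted at rest.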

Responsibility and Accountability

Determining accountability for AI-driven judgements becomes a crucial issue as AI systems get more independent.

Who Makes the AI Decisions?

Developers and Companies: Businesses developing AI systems need to make sure that moral standards are adhered to.

Regulators and Governments: Putting laws and regulations in place to monitor AI applications.

End Users: Recognising the limitations of AI and making responsible use of it.

Ethical Development Strategies for AI in the Future

Ethical AI development techniques should be used to ensure that AI benefits society while reducing risks.

Making Progress in Ethical AI:

Ethical AI Guidelines: Developing moral standards for AI development and application.

Human-in-the-Loop Approach: Ensuring human oversight in crucial AI applications.

Continuous Monitoring: Consistently assessing AI systems as well as their use.
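A human-in-the-loop approach is often implemented as a routing rule: the system acts on its own only when it is confident and the stakes are low, and otherwise hands the case to a person. The sketch below is one possible shape for such a rule; the `Decision` class, the `route_prediction` function, and the 0.9 threshold are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def route_prediction(label, confidence, threshold=0.9, high_stakes=False):
    """Flag a prediction for human review when it is uncertain or high-stakes."""
    needs_review = high_stakes or confidence < threshold
    return Decision(label, confidence, needs_review)


# Hypothetical model outputs
auto = route_prediction("approve", 0.95)
uncertain = route_prediction("approve", 0.60)
critical = route_prediction("deny", 0.99, high_stakes=True)
print(auto, uncertain, critical)
```

Logging how often cases are routed to review, and how often reviewers overturn the model, also gives a simple signal for ongoing monitoring.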
